Written by Maja Malenko, Hardware Researcher at HENSOLDT Cyber

In the final blog post of our hardware security trilogy, we discuss countermeasures against the attacks mentioned in part two.

Attacks in the IC Supply Chain

The Integrated Circuit (IC) supply chain is truly globalized. In order to reduce costs, a significant number of design houses have become fabless and outsource their production to external foundries.

This cost reduction comes at the expense of trust in the manufacturing process: design houses can no longer control how their designs are used. An untrusted foundry can make extra copies of an IC, steal information, or add hardware Trojans. Consequently, life-critical systems and infrastructures are exposed to security and reliability risks, while design houses suffer severe revenue losses. In a distributed and untrustworthy supply chain, it therefore becomes critical to protect IC designs. We will briefly introduce the most prominent threats and countermeasures associated with untrustworthy foundries.

The practice of selling illegal copies of an IC is known as IC piracy. A foundry may also resell a modified design after reverse engineering it. IC watermarking is a passive technique for proving the authorship of an IC. However, watermarking only proves ownership; it does not prevent misuse of the design.

A dishonest foundry may produce more chips than it is contractually required to produce. Overproduced components may be sold without proper testing, raising concerns about the safety and reliability of critical products. Hardware metering is a method that gives IC designers control over the number of manufactured ICs.

The global chip shortage has raised concerns about the increased use of counterfeit ICs. They have been a long-standing problem impacting governments, industries, and society. Counterfeit ICs appear as re-marked, restored, or empty packages and can be detected physically and electrically.

In the previous blog post, we gave a brief introduction to reverse engineering. Reverse engineering is critical for carrying out many of the attacks in the IC supply chain, especially the injection of meaningful hardware Trojans. Making a design difficult to reverse engineer can therefore be effective in preventing such attacks. Hardware obfuscation, in turn, is an effective method to prevent reverse engineering.

Countermeasures against Reverse Engineering

Hardware obfuscation and split manufacturing are two of the most commonly used countermeasures against reverse engineering and malicious hardware modification. They are a significant step towards trustworthy electronics, bringing the control of the supply chain back to the designer.

Hardware Obfuscation

Hardware obfuscation refers to the process of modifying the design to make its functionality incomprehensible to attackers. It comes in two forms, IC camouflaging and logic locking.

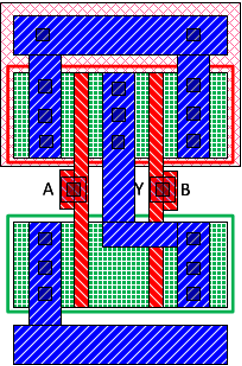

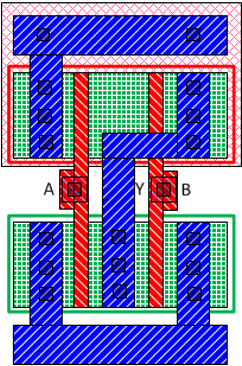

IC camouflaging hides the Boolean functionality of selected gates so that an attacker cannot infer the exact functionality of the netlist while performing IC extraction attacks. Logic locking, on the other hand, obscures the functionality of the design by adding programmable logic to the netlist. Figure 1 shows an example of camouflaging 2-input NAND and NOR gates. As their layouts are identical, it is not easy to distinguish them merely by examining the top metal layer. A reverse engineered netlist may differ from the original if the attacker interprets the camouflaged gates incorrectly.

Figure 1: An example of IC camouflaging: standard cell layout of regular 2-input a) NAND and b) NOR gates and camouflaged c) NAND and d) NOR gates [1]

Logic locking obscures the functionality of the design by adding programmable key-controlled gates, known as key-gates. Only with the correct key-input, provided post-fabrication, is the obfuscated circuit functionally equivalent to the original one. Any other key-input changes the circuit’s original functionality, rendering the chip unusable.

Depending on the design stage at which obfuscation is performed, logic locking approaches can be classified into pre-synthesis logic locking, enforced at register-transfer level (RTL), and post-synthesis logic locking, enforced at netlist level. Depending on the type of circuit being obfuscated, logic locking techniques can be combinational or sequential. Combinational logic locking is performed by inserting different types of key-gates (e.g., XOR/XNOR, MUX, LUT) at selected positions in the circuit. Sequential or FSM logic locking is performed by integrating additional states into the state transition graph.
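To make the idea of sequential locking more concrete, the following C model is a minimal sketch of one possible scheme, not a description of any specific published technique. It assumes that the added states form a chain that is left only when a fixed sequence of inputs is applied after reset. The names `locked_fsm`, `fsm_step`, and `UNLOCK_SEQ`, the sequence values, and the 2-bit counter standing in for the original FSM are all hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define UNLOCK_LEN 3u

/* Hypothetical key sequence that must be applied after reset. */
static const uint8_t UNLOCK_SEQ[UNLOCK_LEN] = { 0x2u, 0x7u, 0x1u };

typedef struct {
    unsigned lock_state; /* 0..UNLOCK_LEN-1: added states, UNLOCK_LEN: functional mode */
    unsigned count;      /* stand-in for the original FSM: a 2-bit counter */
} locked_fsm;

/* After reset the FSM starts in the first added (locked) state. */
static locked_fsm fsm_reset(void) {
    locked_fsm f = { 0u, 0u };
    return f;
}

static bool fsm_is_functional(const locked_fsm *f) {
    return f->lock_state == UNLOCK_LEN;
}

/* One clock cycle of the locked FSM. */
static void fsm_step(locked_fsm *f, uint8_t input) {
    assert(f);
    if (fsm_is_functional(f)) {
        /* Original behaviour: count up whenever input bit 0 is set. */
        if (input & 1u) {
            f->count = (f->count + 1u) & 0x3u;
        }
    } else if (input == UNLOCK_SEQ[f->lock_state]) {
        f->lock_state++;     /* correct symbol: advance along the added states */
    } else {
        f->lock_state = 0u;  /* wrong symbol: fall back to the first added state */
    }
}
```

In this model, the counter does not react to its inputs until `0x2, 0x7, 0x1` have been applied in order. A longer sequence or wider input would enlarge the space an attacker has to search.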

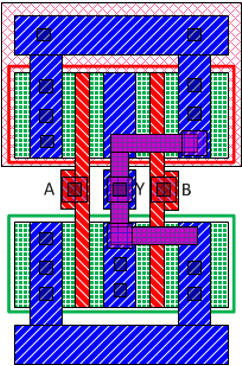

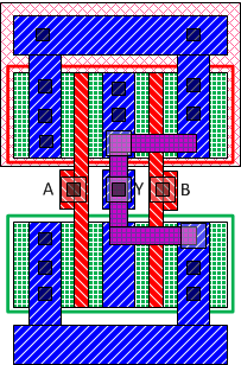

Figure 2 shows a simplified example of a randomly locked half adder module. The combinational locked circuit on the right is identical to the original circuit on the left, as long as the key-input k to the XOR key-gate has the value 0.

Figure 2: An example of logic locking a gate-level netlist of a half adder module
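The behaviour of such a locked half adder can be sketched in a few lines of C. This is only an illustration: the position of the XOR key-gate is an assumption (here it is placed on the carry output), and the names `half_adder_locked` and `locked_matches_original` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

typedef struct {
    bool sum;
    bool carry;
} half_adder_out;

/* Original half adder: sum = a XOR b, carry = a AND b. */
static half_adder_out half_adder(bool a, bool b) {
    half_adder_out out = { a != b, a && b };
    return out;
}

/* Locked variant. ASSUMPTION: the XOR key-gate sits on the carry wire,
 * so the carry is inverted whenever the key-input k is 1. */
static half_adder_out half_adder_locked(bool a, bool b, bool k) {
    half_adder_out out = { a != b, (a && b) != k };
    return out;
}

/* Exhaustively compares both circuits over all four input combinations. */
static bool locked_matches_original(bool k) {
    for (int i = 0; i < 4; ++i) {
        bool a = (i & 1) != 0;
        bool b = (i & 2) != 0;
        half_adder_out ref = half_adder(a, b);
        half_adder_out got = half_adder_locked(a, b, k);
        if (ref.sum != got.sum || ref.carry != got.carry) {
            return false;
        }
    }
    return true;
}
```

With this placement, `locked_matches_original(false)` holds, while `locked_matches_original(true)` does not, because the wrong key inverts the carry for every input.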

Figure 3 illustrates an IC design flow where logic locking is performed on the synthesized gate-level netlist. The locked design is then sent to the foundry. After fabrication, locked chips are brought back to the design house to be activated.

Figure 3: An example of an IC supply chain that integrates logic locking

Logic locking is a research area that has existed for only a little over a decade. Even so, it has made outstanding progress during the past several years, and various defences and attacks have evolved. EPIC [2] was the first successful logic locking approach. It implements combinational logic locking with a public-key cryptography framework for secure key distribution. This method is known as random logic locking due to the random placement of XOR/XNOR key-gates.

Logic locking techniques have been subject to numerous attacks. The oracle-guided threat model makes two assumptions. First, the attacker (e.g., the foundry) can obtain the locked netlist by reverse engineering the layout. Second, the attacker has access to a functional IC or any other form of working logic (i.e., an oracle), on which correct output values can be observed for different input patterns. A brute-force attack, for example, tries all possible key combinations on the locked design and compares the output values with the oracle’s output values. For a key size of N bits, an attacker would need to try up to 2^N key values. As with conventional encryption, the key size determines how difficult it is to discover the key: if the key is sufficiently large (e.g., 128 bits), a brute-force search becomes computationally infeasible. Random logic locking, however, is susceptible to the Boolean satisfiability (SAT)-based attack [3], which prunes the key search space and can determine a correct key in a timely manner. As with every other security-related topic, there has been a constant cat-and-mouse game between logic locking defences and attacks for the last decade.
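The brute-force attack described above can be sketched in C on a toy example. Everything here is an assumption made for illustration: the circuit (a 2-bit adder), the 4-bit key, the placement of one XOR or XNOR key-gate on each input wire, and the names `locked`, `oracle`, and `brute_force_key`. An XOR key-gate is transparent for key bit 0 and an XNOR key-gate for key bit 1, so the correct key equals the bit mask of XNOR positions.

```c
#include <assert.h>
#include <stdint.h>

#define KEY_BITS   4u    /* toy key size; the loop below needs up to 2^KEY_BITS trials */
#define INPUT_BITS 4u
#define XNOR_GATES 0xAu  /* hypothetical: key-gates 1 and 3 are XNOR, 0 and 2 are XOR */

/* Original function: adds two 2-bit numbers packed into a 4-bit input. */
static uint8_t original(uint8_t x) {
    return (uint8_t)((x & 0x3u) + ((x >> 2) & 0x3u));
}

/* Locked netlist as recovered by reverse engineering: one key-gate per
 * input wire. Per bit, the gate output is wire ^ key_bit ^ is_xnor. */
static uint8_t locked(uint8_t x, uint8_t key) {
    uint8_t wires = (uint8_t)((x ^ key ^ XNOR_GATES) & 0xFu);
    return original(wires);
}

/* Oracle: an activated chip. The attacker only observes its outputs. */
static uint8_t oracle(uint8_t x) {
    return original(x);
}

/* Returns the first key for which the locked netlist agrees with the
 * oracle on every input pattern, or -1 if there is none.
 * *trials receives the number of keys tried. */
static int brute_force_key(unsigned *trials) {
    assert(trials);
    *trials = 0u;
    for (unsigned key = 0u; key < (1u << KEY_BITS); ++key) {
        int consistent = 1;
        ++*trials;
        for (unsigned x = 0u; x < (1u << INPUT_BITS); ++x) {
            if (locked((uint8_t)x, (uint8_t)key) != oracle((uint8_t)x)) {
                consistent = 0;
                break;
            }
        }
        if (consistent) {
            return (int)key;
        }
    }
    return -1;
}
```

For this toy circuit the search finds the key `0xA` after 11 trials. Each additional key bit doubles the upper bound of the outer loop, which is why the approach does not scale to keys of, for example, 128 bits.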

Split Manufacturing

Split manufacturing divides a design into Front End Of Line (FEOL) and Back End Of Line (BEOL) layers. Both are fabricated independently and then aligned using electrical, mechanical, or optical alignment techniques. Split manufacturing hides the BEOL connections from untrustworthy foundries. Figure 4 shows an example of split manufacturing IC design flow, as presented in [4].

Figure 4: Split manufacturing design flow [4]

Countermeasures against Fault Injection Attacks

In our previous blog post, we saw that faults can be injected using various techniques. Conditional branch instructions, for example, can be changed or skipped with a single instruction fault (i.e., a single glitch). As a result, fault injection attacks (FIAs) can effectively bypass the security mechanisms built into the secure boot, placing devices or systems at risk.

Countermeasures against FIAs can be implemented both in software and in hardware. These mechanisms are rooted in fault-tolerant computing and are used in safety-critical systems. Most commonly, they leverage redundancy in software or hardware computations.

Software countermeasures are based on several fault-safe principles: duplicating computations by introducing redundant conditional checks, performing redundant memory accesses on critical values, avoiding fail-open scenarios, etc. The probability of successfully injecting multiple faults in consecutive instructions is much lower than that of injecting a single glitch. Therefore, redundancy checks are an effective countermeasure against fault injection attacks.
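As an illustration of a redundant memory access on a critical value, the following C sketch reads the value twice and only accepts it if both reads agree. The function name `read_critical` and its interface are hypothetical. The `volatile` qualifier is there so that the compiler actually emits two separate loads instead of merging them.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Reads a critical value twice and reports a fault if the reads differ.
 * Returns true and stores the value in *out only when both reads agree.
 * On a mismatch, *out is left untouched and the caller should fall back
 * to a secure state. */
static bool read_critical(const volatile uint32_t *addr, uint32_t *out) {
    assert(addr && out);
    uint32_t first = *addr;   /* first load */
    uint32_t second = *addr;  /* redundant load of the same location */
    if (first != second) {
        return false;         /* a glitch corrupted one of the two reads */
    }
    *out = first;
    return true;
}
```

A fault that corrupts only one of the two loads is detected by the comparison. An attacker would have to inject the same error into both loads, or additionally skip the comparison, to go unnoticed.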

Last time, we mentioned that a common FIA on secure boot bypasses the signature verification. A system that enters an insecure state due to an error or a glitch is called a fail-open system. Fault-resistant code must never fail open. Instead, in case of a fault, it must fall back to a secure state, i.e., it must fail closed.

Figure 5 illustrates one approach to avoiding fail-open conditions by defaulting to a closed state. In these examples, verifySignature() returns zero on success. The source code segment in figure 5a and its corresponding assembly in figure 5b show a fail-open system: if the conditional branch instruction (bne on line 2 in figure 5b) is skipped, execution falls through to the jump to labelBoot, so the system enters an insecure state and could boot malicious software even when the signature verification fails. In contrast, the source code segment in figure 5c and its corresponding assembly in figure 5d illustrate a fail-closed default: the system remains secure even if the conditional branch instruction (beq on line 2 in figure 5d) is skipped, because execution then falls through to the jump to labelError.

```c
int result = verifySignature();
if(!result)
    boot();
else
    error();
```

a) Source code, fail-open

```asm
call verifySignature
bne a0, zero, labelError
j labelBoot
labelBoot:
    call boot
    j cont
labelError:
    call error
    j cont
cont:
```

b) Assembly of a)

```c
int result = verifySignature();
if(result)
    error();
else
    boot();
```

c) Source code, fail-closed

```asm
call verifySignature
beq a0, zero, labelBoot
j labelError
labelError:
    call error
    j cont
labelBoot:
    call boot
    j cont
cont:
```

d) Assembly of c)

Figure 5: An example of defaulting to a closed state

Redundant checks can further reinforce protection against FIAs. The code in figure 5a can be rewritten using the logical AND operator to guard the open case, as shown in figure 6a. The corresponding assembly in figure 6b shows that all three branch instructions (lines 2, 5, 8) must be skipped for the system to enter an open state and perform an illegal boot. Software-based countermeasures exist in various forms, some of which are much more effective than the examples presented here.

```c
#define MULTI_IF_FAILOUT(condition) \
    if ((condition) && (condition) && (condition))

int result = verifySignature();
MULTI_IF_FAILOUT(!result)
    boot();
else
    error();
```

a) Source code

```asm
call verifySignature
bne a0, zero, labelError
j labelSafe1
labelSafe1:
bne a0, zero, labelError
j labelSafe2
labelSafe2:
bne a0, zero, labelError
j labelBoot
labelError:
    call error
    j cont
labelBoot:
    call boot
    j cont
cont:
```

b) Assembly of a)

Figure 6: An example of using redundant checks to guard an open state
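One practical caveat when using the macro from figure 6a: an optimizing compiler is allowed to collapse three identical checks of an ordinary variable into a single branch, which would silently remove the redundancy. A common way to keep the checks, sketched below, is to declare the checked variable `volatile` so that every evaluation of the condition performs its own read. The function `guarded_boot` and the `outcome` type are hypothetical stand-ins for the calls to boot() and error(). Inspecting the generated assembly remains advisable, since the C language itself gives no guarantees about resistance to glitches.

```c
#include <assert.h>

#define MULTI_IF_FAILOUT(condition) \
    if ((condition) && (condition) && (condition))

typedef enum { DID_ERROR = 0, DID_BOOT = 1 } outcome;

/* verify_result follows the convention of figure 5: 0 means the
 * signature is valid, any other value means verification failed. */
static outcome guarded_boot(int verify_result) {
    /* volatile: each of the three evaluations of !result is a separate
     * read that the compiler may not merge or remove. */
    volatile int result = verify_result;

    MULTI_IF_FAILOUT(!result)
        return DID_BOOT;   /* reached only if all three checks pass */
    else
        return DID_ERROR;  /* any failing check ends up here */
}
```

Functionally, `guarded_boot(0)` yields `DID_BOOT` and any non-zero argument yields `DID_ERROR`, which can be confirmed with simple `assert` calls. The difference to the non-volatile version is only visible in the emitted branch instructions.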

Hardware countermeasures against fault injection attacks can be implemented using lockstep processors, which provide redundancy in hardware. In dual-core lockstep, the main core performs the computations, while a secondary core duplicates them; the results of both cores are compared, and a mismatch indicates a fault. In triple-core lockstep, a fault is detected using a majority vote mechanism.
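The comparison logic of a lockstep system can be modelled in software as follows. This is only a behavioural sketch under simplifying assumptions: real lockstep processors compare core outputs in dedicated hardware, typically every clock cycle, and the type `lockstep_result` and both function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    uint32_t value;       /* result to use, meaningful only if value_valid */
    bool value_valid;     /* true if an agreed result exists */
    bool fault_detected;  /* true if the core outputs were not all identical */
} lockstep_result;

/* Dual-core lockstep: a mismatch is detected, but it is unknown which
 * core is wrong, so no result can be trusted. */
static lockstep_result dual_lockstep(uint32_t main_out, uint32_t shadow_out) {
    lockstep_result r;
    r.fault_detected = (main_out != shadow_out);
    r.value_valid = !r.fault_detected;
    r.value = r.value_valid ? main_out : 0u;
    return r;
}

/* Triple-core lockstep: a majority vote detects a fault and, if at least
 * two cores agree, still delivers their common result. */
static lockstep_result triple_lockstep(uint32_t a, uint32_t b, uint32_t c) {
    lockstep_result r;
    r.fault_detected = !(a == b && b == c);
    if (a == b || a == c) {
        r.value = a;
        r.value_valid = true;
    } else if (b == c) {
        r.value = b;
        r.value_valid = true;
    } else {
        r.value = 0u;          /* all three differ: no majority */
        r.value_valid = false;
    }
    return r;
}
```

In this model, `triple_lockstep(7, 7, 9)` reports a fault but still returns 7 as the voted result, whereas `dual_lockstep(7, 9)` can only signal that something went wrong.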

Countermeasures based on both software and hardware have their advantages and disadvantages. Selecting the appropriate countermeasure requires considering the desired trade-off between security, overhead, performance, and convenience.

This is the final blog post in our hardware security trilogy. Although we have only scratched the surface of this topic, we hope you found it informative and that it sparked your interest in hardware security.

[1] J. Rajendran, O. Sinanoglu, R. Karri. “VLSI testing based security metric for IC camouflaging”. ITC 2013.

[2] J. A. Roy, F. Koushanfar, I. L. Markov. “EPIC: Ending Piracy of Integrated Circuits”. DATE 2008.

[3] P. Subramanyan, S. Ray, S. Malik. “Evaluating the security of logic encryption algorithms”. HOST 2015.

[4] J. Rajendran, O. Sinanoglu, R. Karri. “Is split manufacturing secure?”. DATE 2013.
